DATA INFRASTRUCTURE

Scale smarter with a technology architecture that balances centralized efficiency, decentralized flexibility, and the agility to adopt new technologies, all backed by expert design and execution support.

G4 specializes in helping you scale, secure, and optimize your data infrastructure across both cloud and on-premises environments. Our approach focuses on unifying siloed solutions into a cohesive digital ecosystem tailored to your unique needs. By aligning your technology architecture with your business objectives, we ensure your data systems deliver the reliability, security, agility, and cost optimization you need to thrive. We partner with you to:

1. Identify scaling points and design a technology architecture that supports growth
2. Unify and centralize solutions to maximize value and minimize inefficiencies
3. Implement modular designs that provide the agility to adopt the latest technological innovations
4. Decommission legacy systems and migrate data seamlessly while ensuring business continuity
5. Provide FinOps capabilities to optimize costs and resource utilization

Our cross-functional teams, composed of strategy leaders, technical program and product managers, architects, engineers, analysts, and data scientists, work closely with your technology, business, and governance stakeholders to deliver critical projects on time and within budget.

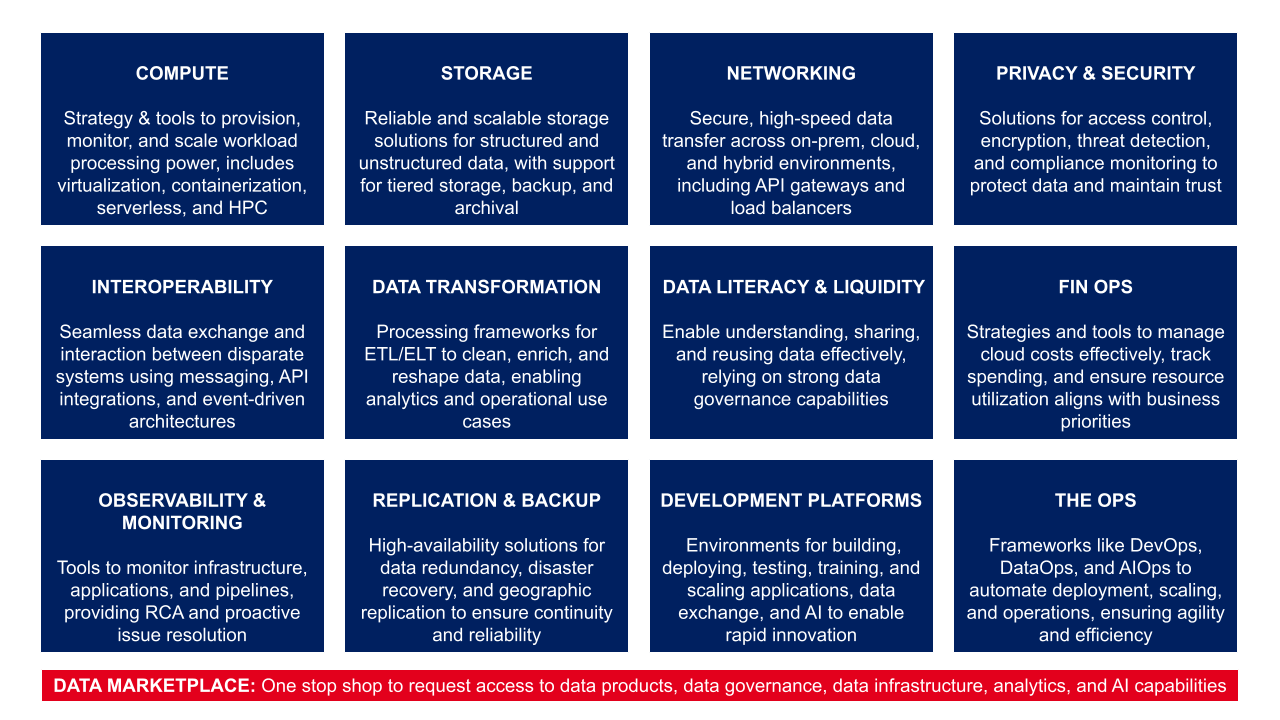

G4 Data Infrastructure Areas of Focus

What to Expect from a Data Infrastructure Engagement

Workstream 1: Design Infrastructure Architecture

We believe a robust architecture framework is the cornerstone of a scalable, flexible, secure, and cost-optimized data infrastructure.

Key Activities

Understand Current State: Review the existing architecture and audit compute, storage, and network configurations. Benchmark performance and regulatory compliance against industry standards to identify gaps and vulnerabilities.

Define Future Infrastructure Needs and Requirements: Document functional and non-functional requirements by mapping data flows and workloads to your data strategy and initiatives. Identify and group related components to reduce latency, and build in redundancy for high availability and fault tolerance.

Design Infrastructure Architecture: Define the architecture using reusable templates, modular design frameworks, and auto-scaling policies that support workload demands and future innovation. Assess siloed solutions and devise a plan to unify them, balancing flexibility, cost, performance, and security.

Optimize Design: Validate reliability and scalability through testing, simulations, and phased scaling plans so the data infrastructure can absorb demand surges and remain resilient.
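As a minimal illustration of the auto-scaling policies mentioned above (not G4's method), the sketch below implements a simple target-tracking rule. It uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), clamped to minimum and maximum bounds. The function name, the 10% tolerance band, and the bounds are hypothetical values chosen for illustration.

```python
import math


def desired_replicas(current: int, observed: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Target-tracking scaling (illustrative): size capacity so the average
    per-replica metric (e.g., CPU %) moves toward `target`."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    ratio = observed / target
    # Skip small adjustments near the target to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return min(max(current, min_replicas), max_replicas)
    proposed = math.ceil(current * ratio)
    # Clamp to the policy's hypothetical bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 replicas running at 90% CPU against a 60% target -> scale out to 6
print(desired_replicas(4, observed=90, target=60))
# 4 replicas at 20% CPU -> scale in to 2
print(desired_replicas(4, observed=20, target=60))
# A surge far above target is capped at max_replicas (10)
print(desired_replicas(10, observed=300, target=60))
```

The tolerance band is a deliberate design choice: without it, small metric fluctuations around the target would trigger constant scale-in and scale-out events.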

Note: This workstream focuses on the design of your application and workload architectures. We can also support delivery-cycle architecture design when engaged for execution support on an implementation, migration, or decommissioning project.

Key aspects of data infrastructure architecture design and delivery are well suited to management by AI agents. We can help you integrate AI agents into these workstreams to streamline the management and maintenance of architecture designs. This improves efficiency while complementing your architects' and engineers' efforts, freeing them to focus on high-value, strategic work. Explore more in our Analytics and AI offerings.

Workstream 2: FinOps Design

We help you implement FinOps to take control of your IT spend by aligning financial decisions with your business objectives. Our expertise helps you gain full visibility into costs, optimize resource utilization, and build collaboration across finance, IT, and business teams.

Key Activities

Establish Resource Tagging and Cost Attribution: Implement robust policies for tagging resources and create cost-allocation frameworks that ensure transparency and accuracy across departments.

Establish Budgeting and Cost Governance: Design budgeting mechanisms and governance structures that set clear financial guidelines, enforce spending limits, and align with both short- and long-term business goals.

Enable Real-Time Cost Monitoring and Forecasting: Implement tools and strategies for real-time budget tracking, forecasting, and reporting. As part of this work, G4 will train your teams on FinOps principles, best practices, forecasting, and reporting.

Optimize Resource Utilization and Cost: Identify underutilized or idle resources and design reallocation strategies that improve efficiency and eliminate waste, maximizing the ROI of your data infrastructure.
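To make tag-based cost attribution and idle-resource detection concrete, here is a minimal sketch under stated assumptions; it is not G4's tooling. It assumes a simplified, hypothetical billing export (the `Resource` record, the `cost-center` tag key, and the 5% idle threshold are all illustrative; real cloud billing exports use provider-specific schemas). Untagged spend is routed to a visible `UNALLOCATED` bucket rather than dropped, which is what makes gaps in a tagging policy measurable.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Resource:
    # Hypothetical billing-export row for illustration only.
    resource_id: str
    monthly_cost: float
    avg_utilization: float  # 0.0 to 1.0 over the review window
    tags: dict


def allocate_costs(resources, tag_key="cost-center"):
    """Sum monthly cost per value of `tag_key`; untagged spend goes to a
    visible 'UNALLOCATED' bucket instead of being silently lost."""
    totals = defaultdict(float)
    for r in resources:
        totals[r.tags.get(tag_key, "UNALLOCATED")] += r.monthly_cost
    return dict(totals)


def find_idle(resources, threshold=0.05):
    """Return IDs of resources whose average utilization is below `threshold`."""
    return [r.resource_id for r in resources if r.avg_utilization < threshold]


inventory = [
    Resource("vm-01", 1200.0, 0.62, {"cost-center": "analytics"}),
    Resource("vm-02", 800.0, 0.02, {"cost-center": "analytics"}),
    Resource("db-01", 2500.0, 0.48, {"cost-center": "finance"}),
    Resource("bucket-07", 150.0, 0.01, {}),
]
print(allocate_costs(inventory))  # {'analytics': 2000.0, 'finance': 2500.0, 'UNALLOCATED': 150.0}
print(find_idle(inventory))       # ['vm-02', 'bucket-07']
```

In practice, the share of spend in the `UNALLOCATED` bucket is a useful governance metric, and flagged idle resources would be reviewed with their owners before any reallocation.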

Workstream 3: Implementation and Migration Support

We provide execution support to ensure seamless transitions to new technologies across cloud and on-premises platforms, managing both vendor solution implementations and bespoke system builds.

Key Activities

Technology Alignment and Planning: Align new technology with business objectives through stakeholder workshops, requirements validation, and success criteria definition. Evaluate the existing technology landscape to identify dependencies, risks, and readiness, and use those findings to develop a migration plan.

Implementation Management: Manage timelines, dependencies, and stakeholder expectations while mitigating risks and ensuring transparent communication across teams to avoid delays.

Data and Application Migration: Execute migrations using automation tools to minimize downtime, maintain data integrity, and validate accuracy through rigorous testing, with rollback strategies in place. G4 offers proprietary accelerators to help automate these processes.

Post-Migration Optimization: Provide comprehensive documentation, resolve any issues, and optimize the performance of the new system while training your teams to manage it successfully over the long term.
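One common way to validate accuracy after a data migration is to compare row counts and content checksums table by table between source and target. The sketch below is a generic illustration of that technique, not one of G4's proprietary accelerators. It uses in-memory SQLite databases as stand-ins for real source and target systems; the `table_fingerprint` and `validate_migration` names and the table-to-key manifest are hypothetical. It assumes both sides return values of the same Python types; a cross-engine migration would first need to normalize types (for example, decimals and timestamps) before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 hex digest) for `table`. Rows are read in
    `key` order so the digest does not depend on physical storage order."""
    # Identifiers cannot be bound as SQL parameters; in this sketch they come
    # from a trusted, hard-coded migration manifest.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def validate_migration(source, target, tables):
    """Compare fingerprints for each table; return the tables that differ."""
    return [
        table for table, key in tables.items()
        if table_fingerprint(source, table, key) != table_fingerprint(target, table, key)
    ]


# Stand-in source and target databases with the same logical content,
# inserted in a different physical order.
src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for db in (src, dst):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
src.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
dst.executemany("INSERT INTO customers VALUES (?, ?)", [(2, "Grace"), (1, "Ada")])

manifest = {"customers": "id"}
print(validate_migration(src, dst, manifest))  # []

# Simulate a corrupted row in the target.
dst.execute("UPDATE customers SET name = 'Alan' WHERE id = 1")
print(validate_migration(src, dst, manifest))  # ['customers']
```

A non-empty result is the kind of signal that would trigger the rollback strategy before cutover rather than after.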

Workstream 4: Decommissioning Legacy Systems

We support the retirement of legacy systems to reduce costs, minimize risks, and ensure seamless transitions. G4's approach streamlines the process while safeguarding business-critical operations.

Key Activities

Map legacy system processes, dependencies, customer journeys, and compliance requirements to identify the critical data and workflows to preserve.

Create decommissioning roadmaps that define milestones, incorporate secure data archiving, validate processes through rigorous testing, and ensure a smooth transition for impacted teams.

As needed, automate redundant workflows to minimize manual intervention, and consolidate operations to remove unnecessary complexity.

Engage G4 to manage and support legacy applications, ensuring business continuity while your team focuses on new initiatives. As part of this work, G4 captures and documents lessons learned, giving your teams actionable insights to fuel future innovation.

Shut down the legacy system, retiring redundant functionality and reallocating resources to higher-value initiatives.

Note: For large-scale data infrastructure projects, we recommend partnering with G4 for comprehensive support. For smaller-scale projects, we can explore an Enablement: Elastic Workforce model, with our resources managed directly by your team.